Modern businesses run increasingly complex data workflows, and the steps in those workflows need reliable coordination. Apache Airflow is an open-source platform for creating, scheduling, and monitoring batch workflows. Because workflows are written in Python, organizations can automate their data pipelines and keep precise control over when, and in what order, each task runs.

Airflow stands out for how easily it connects to other technologies: operators and provider packages let a single workflow work with databases, cloud services, and command-line tools. Whether you’re orchestrating data pipelines or scheduling recurring jobs, Airflow’s web interface helps you keep track of what ran, when it ran, and whether it succeeded.

In this blog post, we’ll explore what makes Airflow a powerful tool for workflow management, its key components, and its architecture. Let’s dive in!

What is Airflow?

Apache Airflow is an open-source platform for programmatically creating, scheduling, and monitoring batch workflows. Workflows are defined in Python, so a single pipeline can coordinate steps that run on many different technologies. Airflow orchestrates data pipelines, starts each task only once the tasks it depends on have completed successfully (the default behavior), and offers a web interface for monitoring task status and managing runs.

Airflow’s notable features include scalability, since work can be spread across more workers as the load grows; a dynamic design, since pipelines are ordinary Python code and can be generated programmatically; and extensibility, since operators let Airflow integrate with many different technologies, and you can write your own when none exists. The web interface also simplifies finding and fixing errors: it shows exactly which task in a pipeline failed and lets you read that task’s logs.
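To make the “pipelines are Python” point concrete, here is a minimal sketch in plain Python (not Airflow’s API) in which the shape of a pipeline is computed in a loop instead of written out by hand. The table names and task IDs are hypothetical; each task maps to the set of tasks it depends on, which is the kind of structure a DAG describes.

```python
# Toy illustration of "pipelines as code" in plain Python (not Airflow's API).
# The table names and task IDs are hypothetical.


def build_pipeline(tables: list[str]) -> dict[str, set[str]]:
    """Return a mapping of task_id -> set of upstream task_ids."""
    deps: dict[str, set[str]] = {}
    for table in tables:
        extract = f"extract_{table}"
        load = f"load_{table}"
        deps[extract] = set()   # extract tasks have no upstream dependencies
        deps[load] = {extract}  # each load runs after its own extract
    # A final task that waits for every load to finish.
    deps["build_report"] = {f"load_{t}" for t in tables}
    return deps


if __name__ == "__main__":
    pipeline = build_pipeline(["orders", "customers", "payments"])
    for task_id, upstream in sorted(pipeline.items()):
        print(f"{task_id} <- {sorted(upstream)}")
```

Adding a table to the list adds an extract task, a load task, and a new dependency for `build_report`, with no other code changes. In a real Airflow DAG file, the same loop pattern can be used to instantiate operators.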

The main components of Airflow

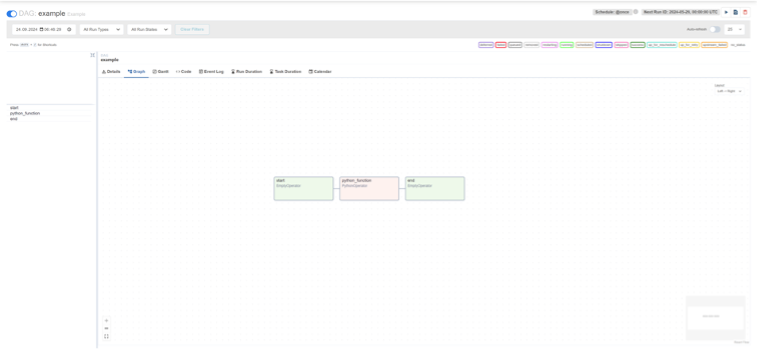

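The Directed Acyclic Graph (DAG) is the central concept in Apache Airflow. A DAG is a collection of tasks together with the dependencies between them, and it defines the structure of a workflow. "Directed" means every dependency points one way (task B runs after task A), and "acyclic" means no chain of dependencies ever loops back to an earlier task. This guarantees that a valid execution order always exists; tasks with no dependency between them can even run in parallel.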
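The guarantee that an acyclic graph always has a valid execution order can be demonstrated with Python’s standard-library `graphlib` module (Python 3.9+). This is a toy model, not how Airflow itself is implemented: it groups tasks into batches that could run in parallel and raises `CycleError` if the dependencies loop back on themselves. The task names are hypothetical.

```python
# Toy DAG model using only the standard library (graphlib, Python 3.9+).
# This illustrates the idea; it is not how Airflow schedules tasks internally.
from graphlib import CycleError, TopologicalSorter


def execution_batches(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches in dependency order.

    `deps` maps each task to the set of tasks it depends on. Tasks in the
    same batch have no dependency on each other, so they could run in
    parallel. Raises CycleError if the graph contains a cycle.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # detects cycles up front
    batches: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose upstreams are all done
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished, unlocking downstream tasks
    return batches


if __name__ == "__main__":
    dag = {
        "extract": set(),
        "transform_a": {"extract"},
        "transform_b": {"extract"},
        "load": {"transform_a", "transform_b"},
    }
    print(execution_batches(dag))
    # [['extract'], ['transform_a', 'transform_b'], ['load']]

    try:
        execution_batches({"a": {"b"}, "b": {"a"}})
    except CycleError as err:
        print("cycle detected:", err.args[1])
```

The two transform tasks land in the same batch because neither depends on the other, while the two-task loop is rejected before anything runs.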

A task is a single unit of work within a DAG, representing one step in the workflow. Operators are reusable templates that define what a task does, such as executing a Python function (`PythonOperator`), running a bash command (`BashOperator`), or interacting with a database or external service. Instantiating an operator inside a DAG creates a task.
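The operator/task relationship can be sketched in a few lines of plain Python. The classes below are hypothetical stand-ins, not Airflow classes. In Airflow, custom operators subclass `BaseOperator` and implement an `execute(context)` method, and `a >> b` declares that `b` runs after `a`; the toy version imitates both ideas. Passing the context dictionary to the callable as keyword arguments is a simplification made for this sketch.

```python
# Toy imitation of the operator/task idea in plain Python.
# Class names are hypothetical; real Airflow operators subclass BaseOperator.
from typing import Any, Callable


class ToyOperator:
    """A template for a unit of work. Each instance is one task."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id
        self.downstream: list["ToyOperator"] = []

    def __rshift__(self, other: "ToyOperator") -> "ToyOperator":
        # Mimics Airflow's `a >> b`: record that `other` runs after `self`.
        # Returning `other` allows chains like `a >> b >> c`.
        self.downstream.append(other)
        return other

    def execute(self, context: dict[str, Any]) -> Any:
        raise NotImplementedError


class ToyPythonOperator(ToyOperator):
    """Runs a Python callable, loosely like Airflow's PythonOperator."""

    def __init__(self, task_id: str, python_callable: Callable[..., Any]) -> None:
        super().__init__(task_id)
        self.python_callable = python_callable

    def execute(self, context: dict[str, Any]) -> Any:
        return self.python_callable(**context)


class ToyPrintOperator(ToyOperator):
    """A second building block that just emits a fixed message."""

    def __init__(self, task_id: str, message: str) -> None:
        super().__init__(task_id)
        self.message = message

    def execute(self, context: dict[str, Any]) -> str:
        print(self.message)
        return self.message


if __name__ == "__main__":
    double = ToyPythonOperator("double", lambda x: x * 2)
    announce = ToyPrintOperator("announce", "done")
    double >> announce
    print(double.execute({"x": 21}))                # 42
    print([t.task_id for t in double.downstream])  # ['announce']
```

Each class is a reusable template; each instance (`double`, `announce`) is a task with its own `task_id`.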

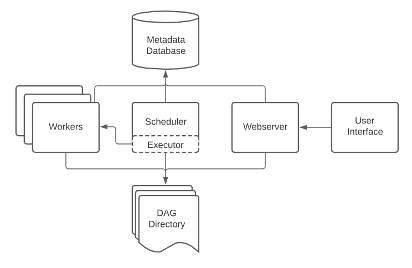

Airflow Architecture

Apache Airflow’s architecture is built on a modular and scalable design, consisting of several key components:

- Scheduler: The Scheduler monitors all DAGs and determines when each task is ready to run, based on the DAG’s schedule and whether the task’s upstream dependencies have completed. It then hands ready tasks to the executor.

- Executor: The Executor determines where and how tasks run, either locally or distributed across multiple workers, depending on the executor you configure (e.g., LocalExecutor, CeleryExecutor, KubernetesExecutor). It is configured as part of the scheduler rather than run as a separate service.

- Metadata Database: Airflow stores metadata, such as DAG definitions, task states, and job information, in a relational database (e.g., MySQL, PostgreSQL).

- Web Server (UI): The Web Server provides a user-friendly interface for monitoring DAGs, inspecting task status, and managing workflows.

- Worker(s): Workers execute the tasks handed to them by the executor. With distributed executors such as CeleryExecutor, workers are separate processes, often on separate machines, that can be scaled up or down with the workload.
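To see how these pieces cooperate, here is a deliberately simplified simulation in plain Python; it is not Airflow code, and every name in it is hypothetical. An in-memory SQLite table stands in for the metadata database, a loop plays the scheduler by selecting tasks whose upstream tasks have succeeded, and a `ThreadPoolExecutor` stands in for the executor and its workers. Unlike Airflow’s continuously running scheduler, this loop waits for each batch of tasks to finish before looking for new work.

```python
# Toy simulation of Airflow's architecture in plain Python (not Airflow code).
#   - an in-memory SQLite table plays the metadata database,
#   - a loop plays the scheduler, choosing tasks whose upstreams succeeded,
#   - a thread pool plays the executor and its workers.
# Assumes `deps` is acyclic, every upstream task is itself a key in `deps`,
# and every task in `deps` has an entry in `funcs`.
import sqlite3
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_dag(
    deps: dict[str, set[str]],
    funcs: dict[str, Callable[[], object]],
    max_workers: int = 2,
) -> dict[str, str]:
    db = sqlite3.connect(":memory:")  # stand-in "metadata database"
    db.execute("CREATE TABLE task_instance (task_id TEXT PRIMARY KEY, state TEXT)")
    db.executemany(
        "INSERT INTO task_instance VALUES (?, 'scheduled')", [(t,) for t in deps]
    )

    def get_state(task_id: str) -> str:
        row = db.execute(
            "SELECT state FROM task_instance WHERE task_id = ?", (task_id,)
        ).fetchone()
        return row[0]

    def set_state(task_id: str, state: str) -> None:
        db.execute(
            "UPDATE task_instance SET state = ? WHERE task_id = ?", (state, task_id)
        )

    with ThreadPoolExecutor(max_workers=max_workers) as workers:
        while True:
            # Scheduler: a task is ready if it is still 'scheduled' and
            # every upstream task has succeeded.
            ready = [
                t for t in deps
                if get_state(t) == "scheduled"
                and all(get_state(u) == "success" for u in deps[t])
            ]
            if not ready:
                break
            # Executor: hand the ready tasks to the worker pool.
            for t in ready:
                set_state(t, "running")
            futures = {t: workers.submit(funcs[t]) for t in ready}
            # Record each outcome in the metadata database (waits for completion).
            for t, fut in futures.items():
                set_state(t, "success" if fut.exception() is None else "failed")

    # Anything never started was blocked by a failed upstream task.
    db.execute(
        "UPDATE task_instance SET state = 'upstream_failed' WHERE state = 'scheduled'"
    )
    result = dict(db.execute("SELECT task_id, state FROM task_instance"))
    db.close()
    return result


if __name__ == "__main__":
    deps = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
    funcs = {t: (lambda t=t: print("running", t)) for t in deps}
    print(run_dag(deps, funcs))
```

If a task raises an exception, it is recorded as `failed`, and its downstream tasks end as `upstream_failed` without ever running. The state names are borrowed from Airflow’s task states, but the bookkeeping here is only an approximation of what Airflow does.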

This architecture allows Airflow to efficiently orchestrate and manage workflows across a variety of environments.